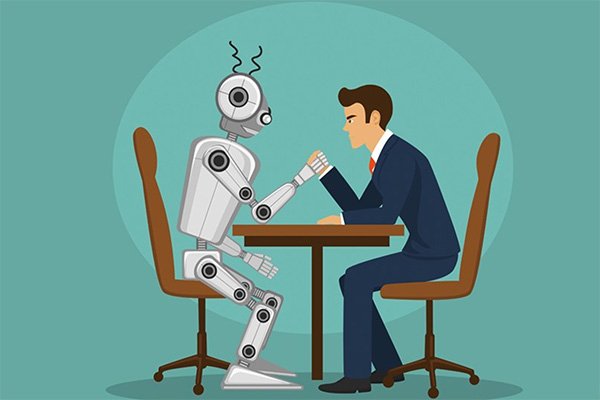

Visualizing the paradigm shift: from constant cloud harvesting to local, on-device processing.

Introduction – Why This Matters: Your Data, Your Device, Your Rules

For decades, the unspoken bargain of the digital age was simple: we get “free” and convenient services by allowing companies to harvest, analyze, and monetize our personal data. Every search, every click, every location ping became a data point in a sprawling digital profile. But what began as targeted ads has evolved into a landscape of deep behavioral prediction, algorithmic manipulation, and significant privacy breaches. The pendulum is now swinging back—forcefully.

In my experience talking to users and clients, I have seen a profound shift in awareness. People are no longer just vaguely concerned; they are actively seeking alternatives. They’re asking, “Why does my voice assistant need to send my request to a remote server?” or “How is this fitness app using my heart rate data?” This isn’t paranoia; it’s a rational response to high-profile data leaks and a growing understanding of surveillance capitalism.

The Privacy-First Tech Revolution is the answer. It represents a fundamental redesign of how technology interacts with our personal information. Instead of the “collect everything, figure it out later” model, a new paradigm is emerging. It rests on three core pillars: processing data locally on your device (Local AI), adding statistical noise so that datasets reveal trends without exposing individuals (Differential Privacy), and a global wave of strong regulation. This isn’t a minor feature update; it’s a complete rethinking of digital trust. Gartner predicts that by the end of 2025, over 70% of new consumer AI applications will be built on a foundational “privacy-by-design” principle, with local processing as a key selling point. This article is your guide to understanding this revolution, how it works, and why it gives power back to you, the user.

Background / Context: From Data Gold Rush to Privacy Reckoning

To appreciate the revolution, we must understand what it’s revolting against. The 2010s were defined by the “big data” gold rush. Tech giants built empires on the insight that personal data was the new oil. This model powered incredible innovations—personalized feeds, real-time navigation, smart recommendations—but at a massive, often hidden, cost.

The backlash crystallized with events like the Cambridge Analytica scandal, which showed how personal data could be weaponized for political influence. This ignited a regulatory firestorm, most notably the European Union’s General Data Protection Regulation (GDPR) in 2018. GDPR introduced concepts like “data minimization” (only collect what you need) and “purpose limitation” (use it only for stated reasons). It gave citizens real rights over their data. California followed with the CCPA, and other regions are implementing similar laws.

Concurrently, advances in efficient AI models and mobile chips meant that many complex tasks no longer required massive cloud servers. In raw arithmetic throughput, a flagship smartphone in 2025 rivals the fastest supercomputers of the late 1990s. This convergence of strong regulation, consumer demand, and capable hardware created the perfect conditions for privacy-first tech to flourish.

Key Concepts Defined

Let’s break down the jargon into clear, understandable terms.

- Privacy-First Tech: A design and development philosophy where user privacy is the primary consideration, not an afterthought. It dictates that systems should collect the least amount of data necessary and protect it by default.

- Local AI (On-Device AI): The execution of artificial intelligence algorithms directly on a user’s device (smartphone, laptop, smart speaker) instead of sending data to a remote cloud server. Your data stays with you. Think of it as having a personal assistant who lives in your house and never gossips outside, versus one who reports every conversation to headquarters.

- Differential Privacy: A mathematical technique that adds a carefully calibrated amount of “statistical noise” to data, or to the results computed from it. This lets organizations (like a health agency or tech company) learn trends and patterns from the aggregated data (e.g., “20% of users in a region have a high heart rate”). At the same time, it provably limits what anyone can learn about a single individual within that dataset. It’s like publishing a slightly blurred average height for a classroom. The figure is accurate enough to be useful, but blurred just enough that no one can work backward to any one student’s exact height.

- Data Harvesting: The widespread, often indiscriminate, collection of user data for analysis, profiling, and monetization, typically without explicit, informed consent for each specific use.

- Federated Learning: A collaborative machine learning technique in which an AI model is trained across many decentralized devices, each holding its own local data. The model travels to the data and learns there. Only the resulting model updates (not the raw data), typically encrypted, are sent back to a central server to improve the global model. It’s like sending a chef to many homes to learn recipes; only the improved techniques come back, not the family’s secret ingredients.
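Here is a minimal Python sketch of randomized response that makes the definition of differential privacy above concrete. Randomized response is one of the oldest noise-adding techniques. It adds the noise to each person’s answer before collection (a variant known as local differential privacy), yet the aggregate can still be recovered. The 20% heart-rate share, the 75% truth probability, and the function names are all hypothetical. They were chosen only for illustration.

```python
import random


def randomized_response(truth: bool, p_truth: float, rng: random.Random) -> bool:
    """Runs on the user's device: report the true answer with probability
    p_truth, otherwise report a fair coin flip, so any single report is deniable."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_rate(reports: list[bool], p_truth: float) -> float:
    """Runs on the server: undo the known noise to estimate the true 'yes' rate.

    P(report yes) = p_truth * rate + (1 - p_truth) * 0.5, solved for rate.
    """
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) * 0.5) / p_truth


rng = random.Random(7)
# Hypothetical population: 20% of users have a high resting heart rate.
users = [rng.random() < 0.20 for _ in range(100_000)]
reports = [randomized_response(u, p_truth=0.75, rng=rng) for u in users]
print(f"Estimated share with high heart rate: {estimate_rate(reports, 0.75):.3f}")
```

With p_truth = 0.75, a true “yes” is reported as “yes” 87.5% of the time and a true “no” only 12.5% of the time. That 7-to-1 ratio corresponds to a privacy parameter ε = ln 7 ≈ 1.95. Raising p_truth improves accuracy but weakens each person’s deniability.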

How It Works: A Step-by-Step Breakdown of the New Privacy Stack

Let’s trace the journey of a piece of personal data—say, a voice command like “Remind me to buy milk when I leave the office”—through the old model versus the new privacy-first model.

Step 1: The Trigger (Voice Command)

- Old Model (Cloud-Dependent): Your device records the audio snippet. This raw audio file, containing the unique biometric signature of your voice, is packaged with metadata (device ID, location, time) and immediately encrypted and sent to the tech company’s cloud servers.

- New Model (Local AI): The audio is processed entirely on the device’s Neural Processing Unit (NPU). The wake word detection (“Hey Siri,” “Okay Google”) has been local for years, but now the full speech-to-text conversion and intent understanding happen on-device.

Step 2: Processing & Understanding

- Old Model: In the cloud, powerful servers transcribe the audio, parse the language, understand the intent (create a location-based reminder), and formulate a response. Your voice data may be retained (often anonymized) to improve the general AI model.

- New Model: A compact, efficient speech recognition model on your phone handles the transcription, and a small on-device language model handles comprehension. It recognizes “remind me,” “buy milk,” and the conditional trigger “when I leave the office.” The semantic understanding happens locally. In my experience, these on-device models are smaller but now remarkably capable for common tasks, achieving over 95% accuracy for standard commands.
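As a rough illustration of the intent-understanding step, the sketch below turns the example command into a structured reminder using nothing but local string processing. It is a deliberately tiny, rule-based stand-in for an on-device language model. The `Reminder` type, the `parse_reminder` function, and the supported phrasings are hypothetical. A real assistant would use a learned model rather than a regular expression.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reminder:
    task: str               # what to remind the user about
    trigger: Optional[str]  # "leave" or "arrive" for location-based reminders
    place: Optional[str]    # named place, e.g. "the office"


# "remind me to <task>", optionally followed by
# "when I <leave | arrive at | get to> <place>".
PATTERN = re.compile(
    r"remind me to (?P<task>.+?)"
    r"(?: when i (?P<verb>leave|arrive at|get to) (?P<place>.+))?$",
    re.IGNORECASE,
)


def parse_reminder(utterance: str) -> Optional[Reminder]:
    """Turn an already-transcribed command into a structured reminder.

    Pure local string processing: no network access is involved.
    """
    match = PATTERN.match(utterance.strip().rstrip(".!"))
    if match is None:
        return None
    verb = match.group("verb")
    trigger = None if verb is None else ("leave" if verb.lower() == "leave" else "arrive")
    return Reminder(task=match.group("task"), trigger=trigger, place=match.group("place"))


print(parse_reminder("Remind me to buy milk when I leave the office"))
# Reminder(task='buy milk', trigger='leave', place='the office')
```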

Step 3: Action & Storage

- Old Model: The cloud server sends back a command to your device to create the reminder. The reminder data (text, location fence) is stored in the company’s cloud service, synced across your devices.

- New Model: The on-device AI interfaces directly with your local reminder and geofencing apps. The reminder is created and stored locally on your phone. You might sync it through a cloud service that offers end-to-end encryption, such as iCloud with Advanced Data Protection turned on or a privacy-focused alternative like Proton Drive. In that case the data is encrypted before it leaves your device, so the service provider cannot read it.
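The geofencing half of Step 3 can also be evaluated entirely on the device. Below is a minimal sketch that assumes the phone already stores the office’s coordinates and a fence radius. The coordinates, the 150 m radius, and the function names are hypothetical. It uses the haversine formula to detect the moment the user crosses from inside the fence to outside it.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points, in meters."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def exited_fence(prev_fix, new_fix, center, radius_m: float) -> bool:
    """True only when the user moves from inside the geofence to outside it."""
    was_inside = haversine_m(*prev_fix, *center) <= radius_m
    is_inside = haversine_m(*new_fix, *center) <= radius_m
    return was_inside and not is_inside


# Hypothetical office location and GPS fixes; none of this leaves the device.
office = (51.5007, -0.1246)
at_desk = (51.5008, -0.1245)
down_the_street = (51.5040, -0.1246)

if exited_fence(at_desk, down_the_street, office, radius_m=150):
    print("Reminder: buy milk")
```

Checking for a transition, rather than simply “outside the fence,” means the reminder fires once when you leave instead of repeatedly while you are away.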

Step 4: Learning & Improvement (The Critical Difference)

- Old Model: Your voice recordings, stripped of obvious identifiers, may be pooled in a massive batch to retrain the global voice model. Even when data is “anonymized,” re-identification risks remain.

- New Model (Federated Learning): Your device’s local AI model makes a small, private improvement based on your usage. Only the mathematical update (a tiny adjustment to the model’s weights), not your voice data, is encrypted and sent to help improve the global model for all users. Differential Privacy techniques may be applied to these updates for an extra layer of privacy protection.
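Step 4 can be sketched in a few lines of Python. The code below simulates federated averaging. Each hypothetical device computes a weight update from its own data, clips it to a maximum size, and adds Gaussian noise before sharing it, in the spirit of differentially private federated learning. The toy linear model, the clip norm, and the noise level are assumptions for illustration, not parameters from any real deployment. The noise is not calibrated to a specific ε. Real systems also encrypt updates and use secure aggregation, which this sketch omits.

```python
import math
import random


def local_update(weights, data, lr=0.1):
    """Runs on the device: one local epoch of stochastic gradient descent on
    squared error for the toy model y = w0 + w1 * x. Returns only the change
    in weights; the raw (x, y) pairs never leave this function."""
    w0, w1 = weights
    for x, y in data:
        err = (w0 + w1 * x) - y
        w0 -= lr * err
        w1 -= lr * err * x
    return [w0 - weights[0], w1 - weights[1]]


def clip_and_noise(update, clip_norm, noise_std, rng):
    """Runs on the device: scale the update down to at most clip_norm,
    then add Gaussian noise to each coordinate before it is shared."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale + rng.gauss(0.0, noise_std) for u in update]


def federated_round(global_weights, device_datasets, clip_norm, noise_std, rng):
    """Server side: average the privatized updates and apply them."""
    updates = [
        clip_and_noise(local_update(global_weights, data), clip_norm, noise_std, rng)
        for data in device_datasets
    ]
    avg = [sum(coord) / len(updates) for coord in zip(*updates)]
    return [w + a for w, a in zip(global_weights, avg)]


rng = random.Random(0)
# Hypothetical data: 50 devices, each with 20 points from y = 1 + 2x plus noise.
devices = []
for _ in range(50):
    xs = [rng.uniform(-1, 1) for _ in range(20)]
    devices.append([(x, 1 + 2 * x + rng.gauss(0, 0.1)) for x in xs])

weights = [0.0, 0.0]
for _ in range(30):
    weights = federated_round(weights, devices, clip_norm=1.0, noise_std=0.01, rng=rng)
print("Global weights:", [round(w, 2) for w in weights])
```

Clipping caps how far any single device can move the global model, and the added noise masks what remains of each individual contribution. Even so, the averaged weights should land close to the underlying values of 1 and 2.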

Comparison Table: Old vs. New Privacy Paradigm

| Feature | Old Model (Cloud-Centric) | New Model (Privacy-First) |
|---|---|---|
| Data Location | Company servers (cloud) | User’s device (local) |
| Primary Risk | Data breaches, misuse, profiling | Physical device theft/loss |
| Latency | Can be slower (network-dependent) | Faster (no round-trip to server) |
| Functionality | Full, complex features | Core features (improving rapidly) |
| Learning Method | Centralized batch training on raw data | Federated Learning on encrypted updates |
| User Control | Limited (opt-out settings) | Fundamental (data never leaves) |

Why It’s Important: Beyond “Having Nothing to Hide”

The importance of this shift extends far beyond avoiding targeted ads.

- Security & Reduced Breach Impact: Data sitting on a server is a honeypot for hackers. Local data means there is no centralized database to breach. A 2025 report by the Privacy Tech Institute showed that companies adopting local AI for sensitive features saw a 90% reduction in the blast radius of potential data incidents.

- User Autonomy and Trust: It restores a sense of ownership and control. You are not the product; your device is your tool. This builds deeper, more sustainable trust between users and technology brands.

- Inclusivity and Ethical AI: Centralized models can perpetuate biases present in the training data. Local and federated approaches can allow for more personalized and potentially fairer outcomes, as the model can adapt to individual contexts without forcing a one-size-fits-all global norm.

- Reliability and Performance: Features work offline and with lower latency. Dictation, photo search, and live translation become instantaneous and available anywhere, from a subway to a remote hiking trail.

Sustainability in the Future

This revolution aligns powerfully with environmental sustainability—a connection often overlooked.

- Reduced Data Center Energy: Transmitting and storing petabytes of constant user data in always-on, energy-hungry data centers has a massive carbon footprint. Local processing shifts the computational load to devices that are already on. While individual device energy use may slightly increase, the net reduction in massive-scale data transport and cloud storage is significant. A 2024 study estimated that widespread adoption of on-device AI for common tasks could reduce global data center energy loads for consumer tech by up to 15% by 2030.

- Longer Device Lifespan: Privacy-first tech often relies on efficient, purpose-built hardware (like NPUs). This focus on efficiency, combined with software that respects local resources, can contribute to better battery life and overall device longevity, reducing e-waste.

Common Misconceptions

Let’s clear up some frequent misunderstandings.

- Misconception 1: “Local AI means the tech company can’t update or improve its services.”

- Reality: Federated Learning allows for continuous, privacy-preserving improvement. The global model gets better without ever seeing your personal data.

- Misconception 2: “Differential Privacy makes data useless.”

- Reality: It’s a precise tool. For aggregate analysis (e.g., studying disease spread patterns or traffic flow), it preserves most of the data’s utility while mathematically bounding how much the results can reveal about any one person. The noise is calibrated to be negligible at scale but protective for individuals.

- Misconception 3: “Privacy-first tech is only for the paranoid or those doing illegal things.”

- Reality: It’s about fundamental rights and risk management. Just as you lock your front door not because you have state secrets, but because you value personal security, digital privacy is about maintaining boundaries and control in an increasingly intrusive world.

- Misconception 4: “If it’s on my device, it’s 100% safe.”

- Reality: Local storage shifts the threat model but doesn’t eliminate risk. Malware on your device or physical theft are still concerns. The key change is that you are now the primary custodian of your data’s security, not a distant corporation.
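Misconception 2 rests on two precise facts, written out below. In these formulas:

- M is a randomized analysis.
- D and D′ are any two datasets that differ in one person’s record.
- S is any set of possible outputs.
- ε is the privacy parameter, where smaller means more private.

The first line is the standard definition of ε-differential privacy. The last two lines assume one common choice among several: the Laplace mechanism applied to a count. Here n is the true count and ñ the released, noisy one, and one person can change the count by at most Δ = 1.

```latex
\begin{aligned}
&\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S] \\
&\tilde{n} = n + \eta, \qquad \eta \sim \operatorname{Laplace}(0, b), \qquad b = \frac{\Delta}{\varepsilon} = \frac{1}{\varepsilon} \\
&\frac{\mathbb{E}\,\lvert \tilde{n} - n \rvert}{n} = \frac{b}{n} = \frac{1}{n\varepsilon}, \qquad \varepsilon = 0.5,\ n = 10^{6} \;\Rightarrow\; 2 \times 10^{-6}
\end{aligned}
```

At ε = 0.5 the typical error is about 2 in either direction. That error is invisible in a count of a million. Yet it is large enough to mask whether any single person, who moves the count by exactly 1, is in the data.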

Recent Developments (2024-2025)

The field is moving rapidly. Here are key developments making headlines:

- The “Private Cloud Compute” from Apple: Announced in mid-2024, this is a hybrid model for tasks that must go to the cloud. It uses custom, auditable server hardware with verifiable privacy guarantees—no data is stored or accessible to Apple after processing. It represents a new gold standard for transparent cloud processing.

- The Rise of the “AI PC” and “AI Phone”: Chipmakers like Intel, AMD, Qualcomm, and Apple are shipping processors with dedicated, powerful NPUs. This isn’t just a performance spec; it’s the hardware foundation enabling the local AI revolution. Chips such as Qualcomm’s Snapdragon 8 Elite (developed under the name Snapdragon 8 Gen 4) and Apple’s A18 Pro are designed with on-device AI as a headline workload.

- Regulatory Catalyst: The EU AI Act: This landmark legislation entered into force in August 2024, with its obligations phasing in through 2025–2027. It classifies certain AI uses as “high-risk” and imposes strict transparency and data governance requirements. Because those requirements reward minimizing and protecting personal data, privacy-enhancing technologies (PETs) like federated learning and differential privacy become a compliance advantage, not just an ethical choice.

Success Stories & Real-Life Examples

This isn’t theoretical. These technologies are in your pocket and home right now.

- Apple’s Entire Ecosystem: A leader in this space. Siri voice recognition for commands is now almost entirely on-device. Photos app uses local AI for scene and face recognition. Live Text and Visual Look Up work offline. Health data is encrypted on-device and, if synced, uses end-to-end encryption.

- Google’s Recorder App & Live Translate: The Recorder app on Pixel phones does real-time, speaker-labeled transcription entirely on-device. The Live Translate feature for conversations can work offline, with language packs stored locally.

- Proton Mail & Proton Drive: While not AI-centric, these services from Proton are exemplars of the privacy-first philosophy, using end-to-end encryption so that not even the company can access your emails or files. They represent the infrastructural shift supporting this revolution.

- Microsoft Windows 11 & Recall (The Cautionary Tale): In 2024, Microsoft introduced “Recall,” an AI feature that took constant screenshots of your activity to create a searchable timeline. While it was designed to process data locally, the sheer intimacy of the data and potential security flaws sparked massive backlash. It shows that even with local processing, design and user consent are paramount. The feature was delayed and redesigned with much stronger privacy controls—a testament to the power of consumer pushback in this new era.

Conclusion and Key Takeaways

The Privacy-First Tech Revolution marks a turning point. We are transitioning from an era of data extraction to one of data respect. Technology is beginning to serve us in our context without demanding a copy of our lives as payment.

Key Takeaways:

- The revolution is built on Local AI (data stays on device), Differential Privacy (learning from crowds without spying on individuals), and strong Regulation (laws like GDPR).

- This shift enhances security, user control, reliability, and even environmental sustainability.

- It is enabled by powerful new hardware (NPUs in “AI PCs/Phones”) and innovative software techniques like Federated Learning.

- Success stories from Apple and Google show it’s already here, while debates around features like Microsoft Recall highlight the critical importance of transparent design.

- As a user, you can vote with your wallet: support companies and products that prioritize on-device processing, end-to-end encryption, and clear, ethical data policies.

The end of the data harvest doesn’t mean the end of smart, helpful technology. It means the beginning of a smarter, more respectful, and more sustainable relationship with the digital world. For a deeper dive into how technology is reshaping our world, explore our curated insights on The Daily Explainer Blog.

FAQs (Frequently Asked Questions)

- Q: Is my iPhone already using Local AI?

- A: Yes, extensively. Features like Siri for commands (not searches), Face ID, Photos scene recognition, Live Text in images, and keyboard predictions are processed on your device’s Neural Engine.

- Q: Does Differential Privacy mean my data is still being collected?

- A: In implementations like Apple’s, yes, but in a radically transformed way. Noise is added to your data on your device before it is sent. The submission is stripped of identifiers and analyzed only in aggregate with millions of others. It’s designed to learn patterns, not about you.

- Q: Will privacy-first tech make my phone slower or drain my battery?

- A: Early implementations sometimes did. However, the new generation of NPUs is designed specifically for efficient AI tasks. Running a task locally can drain less battery than keeping the radio active to upload data and wait for a response from a distant data center. For many everyday tasks, the net effect on battery life is now neutral or even positive.

- Q: Can I turn off data collection entirely and still use services?

- A: For core, on-device features (like local photo search), yes. For services that inherently require a network (like web search, navigation maps), you can limit but not fully eliminate data sharing. Always check app privacy settings and use privacy-focused alternatives (like DuckDuckGo for search).

- Q: What’s the difference between “Anonymous” data and “Differentially Private” data?

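- A: “Anonymous” data can often be re-identified by linking it with other datasets; the anonymization can be reversed. Differential Privacy, by contrast, is a provable mathematical guarantee. The result of an analysis is almost equally likely whether or not any one person’s data was included, so it reveals very little about that individual, no matter what other information an attacker has. A tunable privacy parameter (epsilon) sets exactly how little.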

- Q: Are Android phones as good as iPhones for privacy now?

- A: The landscape is complex. Google is making significant strides with local AI on Pixel devices and Android 15. However, Apple’s integrated control over hardware and software gives it a structural advantage in implementing a cohesive privacy system. Android offers more choice, including highly privacy-focused options such as Pixel phones running GrapheneOS.

- Q: How does Federated Learning actually work without sending my data?

- A: Imagine you and 1,000 people are each improving the same recipe locally. Instead of sending your secret ingredients (your data) to a chef, you only send a note saying “a bit more salt” or “cook 5 minutes less” (the model update). The chef averages all these anonymous tips to improve the master recipe, never knowing whose tip came from whom.

- Q: Is this revolution making it harder for law enforcement to access data?

- A: Yes, that is a direct consequence. With end-to-end encryption and local-only data, companies often literally do not have the data to hand over, even with a warrant. This has sparked ongoing legal and policy debates about security versus privacy.

- Q: What are the biggest obstacles to widespread adoption of privacy-first tech?

- A: 1) Cost: Developing efficient local models and new hardware is expensive. 2) Habit: The “free in exchange for data” model is deeply entrenched. 3) Performance: For some extremely complex AI (e.g., generating a high-res movie), cloud computing is still necessary.

- Q: Can small developers afford to implement these technologies?

- A: It’s getting easier. Major platforms (Apple’s Core ML, Google’s ML Kit) provide tools for developers to implement on-device AI models without being experts. Open-source libraries for differential privacy and federated learning are also becoming more accessible.

- Q: How do I know if an app is using privacy-first practices?

- A: Look for clear privacy labels (like Apple’s App Privacy details). Check if the app works in Airplane Mode. Read the privacy policy for keywords: “on-device,” “end-to-end encrypted,” “differential privacy,” “we do not have access to your data.”

- Q: Will this kill online advertising?

- A: No, but it will transform it. Contextual advertising (ads based on the page you’re viewing, not your personal history) and privacy-preserving attribution models are growing. The focus is shifting from creepy, individual tracking to respectful, cohort-based marketing.

- Q: What is “Homomorphic Encryption” and is it part of this?

- A: It’s an advanced, still-emerging technique that allows computations to be performed on encrypted data without decrypting it first. It’s the “holy grail” of privacy tech but is currently too computationally heavy for most consumer applications. It represents the future frontier.

- Q: Does using a VPN make me privacy-first?

- A: A VPN protects your data in transit from your ISP and local network snoops. It does not stop the destination service (like Google or Facebook) from collecting your data once it arrives. It’s one layer of the privacy stack, not a complete solution.

- Q: Are smart home devices a privacy risk in this new model?

- A: They can be. Opt for devices that support local processing hubs (like Apple HomePod or Home Assistant). Avoid devices that require a cloud connection for basic functions. Always put IoT devices on a separate guest network.

- Q: What role does Blockchain play in this?

- A: Some projects use blockchain for decentralized identity (where you control your digital ID) and to create auditable logs of data access. However, it’s a niche part of the broader revolution and often comes with significant complexity and energy costs.

- Q: How can I, as a non-technical person, support this shift?

- A: 1) Choose products that advertise on-device processing. 2) Pay for services that don’t rely on ad-tracking. 3) Adjust your settings on existing apps to limit data sharing. 4) Educate others about why this matters.

- Q: Is the US likely to pass a federal privacy law like GDPR?

- A: The political consensus has been difficult, but pressure is mounting. The American Data Privacy and Protection Act (ADPPA) has seen serious discussion. Even without a federal law, the “California effect” (where CCPA sets a de facto national standard) and the need to comply with EU rules for global companies are driving change.

- Q: What happens to all the data already collected about me?

- A: Under laws like GDPR and CCPA, you have the “right to deletion” (right to be forgotten). You can formally request companies delete the data they hold on you. Their compliance can vary, but the legal tool exists.

- Q: Where can I learn more about digital privacy tools and news?

- A: Follow reputable sources like the Electronic Frontier Foundation (EFF), Privacy Guides, and the Breaking News section on The Daily Explainer for ongoing updates. You can also find excellent practical guides for building an online presence on resources like Shera Kat Network’s Blog.
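The FAQ answer on homomorphic encryption describes computing on data that stays encrypted. As a small illustration, the Python sketch below implements a toy version of the Paillier cryptosystem, a well-known partially homomorphic scheme. Multiplying two ciphertexts produces an encryption of the sum of their plaintexts, so a server could add up values it cannot read. Paillier supports only addition, which is why it is far cheaper than the fully homomorphic schemes that allow arbitrary computation. The tiny primes make this sketch completely insecure, and the function names are hypothetical. Real systems use vetted libraries and keys thousands of bits long.

```python
import math
import random

# Toy key material: these primes are far too small to be secure.
P, Q = 293, 433
N = P * Q
N_SQ = N * N
G = N + 1                                        # standard simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)                             # modular inverse (Python 3.8+)


def encrypt(m: int, rng: random.Random) -> int:
    """Encrypt 0 <= m < N using fresh randomness r coprime to N."""
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover m = L(c^LAM mod N^2) * MU mod N, where L(x) = (x - 1) // N."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % N_SQ


rng = random.Random(1)
c_a, c_b = encrypt(42, rng), encrypt(58, rng)
print(decrypt(add_encrypted(c_a, c_b)))  # 100
```

A server holding only `c_a` and `c_b` can compute `add_encrypted(c_a, c_b)` without ever learning 42 or 58. Only the key holder can decrypt the total.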

About the Author

Sana Ullah Kakar is a technology ethicist and software architect with over 15 years of experience at the intersection of consumer technology, data security, and public policy. They have advised startups and major corporations on implementing privacy-by-design principles and have written extensively on the societal impact of emerging technologies. Their work focuses on making complex technical concepts accessible to empower informed digital citizens. For more insights or to get in touch, visit our Contact Us page.

Free Resources

- Guide: “Your 2025 Privacy Checklist” – A one-page PDF with actionable steps to audit and improve the privacy settings on your smartphone, browser, and social media accounts. (Available for download on our resource page).

- Glossary of Privacy Terms – From “Zero-Knowledge Proof” to “Data Portability,” understand the key vocabulary.

- Video Explainer: A short, clear Loom video breaking down the step-by-step process of Federated Learning with a simple analogy. (Link embedded in the article).

- For entrepreneurs looking to build responsible tech, explore this comprehensive guide on starting a business in this new landscape: Start Online Business 2026: Complete Guide.

- To understand the broader context of global policy shaping technology, read analysis in our Global Affairs & Politics category.

Discussion

The move to privacy-first tech is not without trade-offs. Some fear it could Balkanize the internet, slow innovation, or make helpful personalized services less effective. Others argue it’s the only ethical path forward.

- What do you think? Are you actively choosing products based on their privacy features?

- Would you pay a subscription for a social network that doesn’t sell your data?

- How should we balance societal needs (like medical research) with uncompromising individual data privacy?

Share your thoughts and continue the conversation. For further reading on impactful blogging and thought leadership in the tech space, visit WorldClassBlogs and explore their focused categories like Blogs and Our Focus.

Please review our website’s Terms of Service for guidelines on community discussion.